
OVERVIEW

How might we reduce the cognitive burden that admissions reviewers experience during the review process?

PROBLEM

As the fields of machine learning and artificial intelligence continue to grow, so does the popularity of Computer Science graduate programs, and with that popularity comes a growing number of applicants. While this is an exciting opportunity for Carnegie Mellon, it raises two questions: how can the graduate application process be improved to address the growing workload for admissions reviewers, and what should the next generation of graduate application systems look like?

OUR APPROACH

For 7 months, our team developed solutions grounded in user research, including contextual inquiries, concept testing, and A/B testing. Our goal was to reduce cognitive burden by creating designs that improve efficiency, effectiveness, and joy for reviewers. We imagined ApplyGrad as a one-stop system that is functional, beautiful, and able to provide actionable data and insights for reviewers.

SCOPE

UX Research, UX Design, Strategy, Prototyping

ROLE

UX Research Lead, UX Designer

TOOLS

Figma, Trello, Otter.ai, Mural, Keynote, Grain, Maze

TEAM

Lia Slaton, Emily Zou, Yuwen Lu, Anna Yuan

DURATION

7 months

SOLUTION

We redesigned the ApplyGrad system and introduced new features that improve the efficiency, effectiveness, and experience of the review process. We improved the system's visual hierarchy, added features that help reviewers get started quickly, integrated features that support recall, and introduced interactions that make reviewing more pleasant.

PROBLEM SPACE

The Hub of Admissions

Human reviewers process 10,000+ applications each year.

FLAGSHIP OF SCS

ApplyGrad is the flagship graduate admissions system of the School of Computer Science at Carnegie Mellon University and processes thousands of applications each year. It is a custom internal system used by 7 departments and 47 programs.

THE NEED FOR REDESIGN

From handling students’ application materials to evaluating candidates to reporting numbers to administration, the system is a hub for almost all application needs. However, much of its design and infrastructure has become outdated. Small mismatches between the system and reviewers’ natural workflows have gradually increased the time and energy that evaluation requires.

DESIGN PROCESS

The Double Diamond

During the first four months, our research focused on discovering and defining the problem. During the last three months, we worked on developing prototypes and solutions that we could deliver to our clients.


OUR STAKEHOLDERS

Our stakeholders included reviewers, administrators, program directors, admissions heads, applicants, developers, and others.

We knew that our improvements would have ripple effects, benefiting both the people who review applications and the applicants who move through the system.

RESEARCH OVERVIEW

We conducted 100+ user sessions over 7 months.


RESEARCH FINDINGS

Key Findings Across Our Research

"It’s a comprehensive system but people have to use third-party things because of this lack of resource."

EXTRA TOOLS, EXTRA HELP

Reviewers turn to external tools primarily because they offer data manipulation features that ApplyGrad lacks.

"There's a lot of like moving back and forth between external spreadsheets and the ApplyGrad system."

PUTTING PIECES TOGETHER

Reviewers need to remember and combine pieces of information from different materials to form a holistic view of an application.

“There is a tension between wanting to be thorough and holistic and wanting to save time to jump to important things.”

BEING RIGHT OVER BEING FAST

Reviewers are frustrated with time-consuming, repetitive tasks, but prioritize properly evaluating applicants regardless of how long it might take.

REVIEWERS' NEEDS

The Three Needs Identified

After analyzing all the research insights we had uncovered, we distilled three needs that reviewers have during the review process and that the current system does not address well.

DESIGN DIRECTIONS

Three Major Areas of Improvement

We chose to focus on three areas that we identified as high-impact: documentation, data manipulation, and note-taking. Our decision was based on client needs and on factors such as the number of overlapping research areas, time spent per reviewer, and the number of users affected.

DOCUMENTATION

DATA MANIPULATION

NOTE-TAKING

BUILDING A FOUNDATION

The Three Layers

LAYER 1: EFFICIENCY

First, the system must help reviewers reduce their workload. This means running usability and benchmark tests consistently to ensure that baselines are met.

LAYER 2: EFFECTIVENESS

The next step is to add functionality in critical areas that do not currently map onto the user workflow. Building and testing new visuals, calibration, and features that assist the review process is essential.

LAYER 3: EXPERIENCE

A delightful UI can bring joy and lift both morale and mood. These emotional shifts can also change behavior in ways that promote more consistent evaluations.

OUR USERS

Who Are We Designing For?

ApplyGrad reviewers hope to make efficient, effective, and fair decisions for applicants. Our primary users focus mostly on evaluating individual candidates. Secondary users are also concerned with evaluations, but in the context of balancing incoming cohorts.

PRIMARY USERS

SECONDARY USERS

DESIGNING A SOLUTION

Designing for Efficiency

Redesigning the ApplyGrad system to reduce confusion and help reviewers move through key tasks faster.

Our Solution

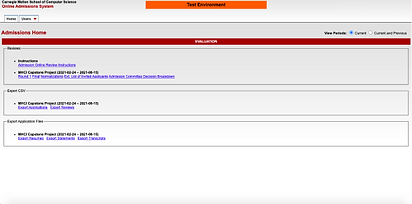

THE LANDING PAGE


DATA TABLE REARRANGEMENT


THE QUICK-REVIEW MODE


DESIGNING A SOLUTION

Designing for Effectiveness

Providing reviewers and admissions committees with tools that support better decision-making and collaboration.

Our Solution

REVIEW FORM (BEFORE)

REVIEW FORM (AFTER)


DESIGNING A SOLUTION

Designing for Experience

Adding elements that support and improve reviewers’ emotional experience.

Our Solution

DATA TABLE (BEFORE)

REVIEW FORM (BEFORE)

DATA TABLE (AFTER)


REVIEW FORM (AFTER)


MOVING FORWARD

A Multi-Stage Implementation Plan

We created a multi-stage implementation plan so that interface design improvements that speed up the review process can be delivered first, while more advanced features are built and refined in the system.

Short-term

Focus on streamlining the design and quickly implementing changes that keep the system's existing functionality intact.

Mid-term

Focus on building initial functionality for the progress view, highlighting, and data manipulation.

Long-term

Focus on developing more advanced features that go beyond core functionality and into design.

IMPROVING THE REVIEW PROCESS

The Value of ApplyGrad

We believe that our solution provides four main value propositions to help improve the review process:

High Customizability & Generalizability

Our design can be adapted to fit the review process of different programs.

Reducing the Cognitive Burden

By incorporating the application materials and review form into one interface, reviewers can quickly cross-reference applicant materials and their notes.

Balancing the Cohort

Seeing an overview of the applicant pool alongside reviewers’ evaluations can make it quicker to assess the composition of incoming cohorts.

Improving Retention

Better reviewing tools can also help retain reviewers and strengthen the incentive to serve on admissions committees.